REPO Cleaned.

parent 16fad834a1

commit d73164bced

@ -1,52 +0,0 @@

-----BEGIN PGP PUBLIC KEY BLOCK-----

mQSuBFpgP9IRDAC5HFDj9beW/6THlCHMPmjSCUeT0lKtT22uHbTA5CpZFTRvrjF8
l1QFpECuax2LiQUWCg2rl5LZtjE2BL53uNhPagGiUOnMC7w50i3YD/KWoanM9or4
8uNmkYRp7pgnjQKX+NK9TWJmLE94UMUgCUach+WXRG4ito/mc2U2A37Lonokpjb2
hnc3d2wSESg+N0Am91TNSiEo80/JVRcKlttyEHJo6FE1sW5Ll84hW8QeROwYa/kU
N8/jAAVTUc2KzMKknlVlGYRcfNframwCu2xUMlyX5Ghjrr3PmLgQX3qc3k/eTwAr
fHifdvZnsBTquLuOxFHk0xlvdSyoGeX3F0LKAXw1+Y6uyX9v7F4Ap7vEGsuCWfNW
hNIayxIM8iOeb6AOFQycL/GkI0Mv+SCd/8KqdAHT8FWjsJUnOWcYYKvFdN5jcORw
C6OVxf296Sj1Zrti6XVQv63/iaJ9at142AcVwbnvaR2h5IqyXdmzmszmoYVvf7jG
JVsmkwTrRvIgyMcBAOLrwQ7I4JGlL54nKr1mIvGRLZ2lH/2sfM2QHcTgcCQ5DACi
P0wOKlt6UgRQ27Aeh0LtOuFuZReXE8dIpD8f6l+zLS5Kii1SB1yffeSsQbTD6bvt
Ic6h88iUKypNHiFcFNncyad6f4zFYPB1ULXyFoZcpPo3jKjwNW/h//AymgfbqFUa
4dWgdVhdkSKB1BzSMamxKSv9O87Q/Zc2vTcA/0j9RjPsrRIfOCziob+kIcpuylA9
a71R9dJ7r2ivwvdOK2De/VHkEanM8qyPgmxdD03jLsx159fX7B9ItSdxg5i0K9sV
6mgfyGiHETminsW28f36O/WMH0SUnwjdG2eGJsZE2IOS/BqTXHRXQeFVR4b44Ubg
U9h8moORPxc1+/0IFN2Bq4AiLQZ9meCtTmCe3QHOWbKRZ3JydMpoohdU3l96ESXl
hNpD6C+froqQgemID51xe3iPRY947oXjeTD87AHDBcLD/vwE6Ys2Vi9mD5bXwoym
hrXCIh+v823HsJSQiN8QUDFfIMIgbATNemJTXs84EnWwBGLozvmuUvpVWXZSstcL
/ROivKTKRkTYqVZ+sX/yXzQM5Rp2LPF13JDeeATwrgTR9j8LSiycOOFcp3n+ndvy
tNg+GQAKYC5NZWL/OrrqRuFmjWkZu0234qZIFd0/oUQ5tqDGwy84L9f6PGPvshTR
yT6B4FpOqvPt10OQFfpD/h9ocFguNBw0AELjXUHk89bnBTU5cKGLkb1iOnGwtAgJ
mV6MJRjS/TKL6Ne2ddiv46fXlY05zJfg0ZHehe49BIZXQK8/9h5YJGmtcUZP19+6
xPTF5zXWs0k3yzoTGP2iCW/Ksf6b0t0fIIASGFAhQJUmGW1lKAcZTTt425G3NYOc
jmhJaFzcLpTnoqB8RKOTUzWXESXmA86cq4DtyQ2yzeLKBkroRGdpwvpZLH3MeDJ4
EIWSmcKPxm8oafMk6Ni9I4qQLFeSTHcF2qFoBMLKai1lqLd+NAzQmbXHDw6gOac8
+DBfIcaj0f5AK/0G39dOV+pg29pISt2PWDDhZ/XsjetrqcrnhsqNNRyplmmy0xR0
srQwQ2FwdGFpbiBEZXJvIChodHRwczovL2Rlcm8uaW8pIDxzdXBwb3J0QGRlcm8u
aW8+iJAEExEIADgWIQQPOeQljGU5R3AqgjQIsgNgoDqd6AUCWmA/0gIbAwULCQgH
AgYVCAkKCwIEFgIDAQIeAQIXgAAKCRAIsgNgoDqd6FYnAQChtgDnzVwe28s6WDTK
4bBa60dSZf1T08PCKl3+c3xx1QEA2R9K2CLQ6IsO9NXD5kA/pTQs5AxYc9bLo/eD
CZSe/4u5Aw0EWmA/0hAMALjwoBe35jZ7blE9n5mg6e57H0Bri43dkGsQEQ1fNaDq
7XByD0JAiZ20vrrfDsbXZQc+1SBGGOa38pGi6RKEf/q4krGe7EYx4hihHQuc+hco
PqOs6rN3+hfHerUolKpYlkGOSxO1ZjpvMOPBF1hz0Bj9NoPMWwVb5fdWis2BzKAu
GHFAX5Ls86KKZs19DRejWsdFtytEiqM7bAjUW75o3O24faxtByTa2SVmmkavCFS4
BpjDhIU2d5RqhJRkb9fqBU8MDFrmCQqSraQs/CqmOTYzM7E8wlk1SwylXN6yBFX3
RAwq1koFMw8yRMVzswEy917kTHS4IyM2yfYjbnENmWJuHiYJmgn8Lqw1QA3syIfP
E4qpzGBTBq3YXXOSymsNKZmKH0rK/G0l3p33rIagl5UXfr1LVd5XJRu6BzjKuk+q
uL3zb6d0ZSaT+aQ/Sju3shhWjGdCRVoT1shvBbQeyEU5ZLe5by6sp0FH9As3hRkN
0PDALEkhgQwl5hU8aIkwewADBQv/Xt31aVh+k/l+CwThAt9rMCDf2PQl0FKDH0pd
7Tcg1LgbqM20sF62PeLpRq+9iMe/pD/rNDEq94ANnCoqC5yyZvxganjG2Sxryzwc
jseZeq3t/He8vhiDxs3WwFbJSylzPG3u9xgyGkKDfGA74Iu+ASPOPOEOT4oLjI5E
s/tB7muD8l/lpkWij2BOopiZzieQntn8xW8eCFTocSAjZW52SoI1x/gw3NasILoB
nrTy0yOYlM01ucZOTB/0JKpzidkJg336amZdF4bLkfUPyCTE6kzG0PrLrQSeycr4
jkDfWfuFmRhKD2lDtoWDHqiPfe9IJkcTMnp5XfXAG3V2pAc+Mer1WIYajuHieO8m
oFNCzBc0obe9f+zEIBjoINco4FumxP78UZMzwe+hHrj8nFtju7WbKqGWumYH0L34
47tUoWXkCZs9Ni9DUIBVYWzEobgS7pl/H1HLR36klfAHLut0T9PZgipKRjSx1Ljz
M78wxVhupdDvHDEdKnq9E9lD6018iHgEGBEIACAWIQQPOeQljGU5R3AqgjQIsgNg
oDqd6AUCWmA/0gIbDAAKCRAIsgNgoDqd6LTZAQDESAvVHbtyKTwMmrx88p6Ljmtp
pKxKP0O5AFM7b7INbQEAtE3lAIBUA31x3fjC5L6UyGk/a2ssOWTsJx98YxMcPhs=
=H4Qj
-----END PGP PUBLIC KEY BLOCK-----

46

Changelog.md

@ -1,46 +0,0 @@

### Welcome to the DEROHE Testnet

[Explorer](https://testnetexplorer.dero.io) [Source](https://github.com/deroproject/derohe) [Twitter](https://twitter.com/DeroProject) [Discord](https://discord.gg/H95TJDp) [Wiki](https://wiki.dero.io) [Github](https://github.com/deroproject/derohe) [DERO CryptoNote Mainnet Stats](http://network.dero.io) [Mainnet WebWallet](https://wallet.dero.io/)

### DERO HE Changelog

[From Wikipedia: ](https://en.wikipedia.org/wiki/Homomorphic_encryption)

### At this point in time, the DERO blockchain has the first-mover advantage in the following:

* Private SCs (no one knows who owns which tokens, who is transferring to whom, or how much is being transferred).
* Homomorphic protocol.
* Ability to do an instant sync (takes a couple of seconds or minutes, depending on network bandwidth).
* Ability to deliver encrypted license keys and other data.
* Pruned chains are at the core.
* Ability to model 99.9% of Earth-based financial models.
* Privacy by design, backed by cryptographic algorithms and many years of research.

### 3.3

* Private SCs are now supported (90% complete).
* A sample token contract is available with a guide.
* Multi-send is now possible: sending to multiple destinations per TX.
* A few more ideas have been implemented and will be tested for review in an upcoming technology preview.

### 3.2

* Open SCs are now supported.
* Private SCs, which have their balances encrypted at all times (under implementation).
* SCs can now update themselves; however, new code will only run on the next invocation.
* Multi-send is under implementation.

### 3.1

* TXs now save around 31 * ringsize bytes each.
* The daemon now supports pruned chains.
* The daemon bootstraps a pruned chain by default.
* The daemon currently syncs a full node when the `--fullnode` option is used.
* P2P has been rewritten for various improvements and an easier-to-understand state machine.
* The address specification now allows embedding various RPC parameters for easier transactions.
* The DERO blockchain reaches transaction finality in a couple of blocks (less than 1 minute), unlike other blockchains.
* Proving and parsing of embedded data is now available in the explorer.
* Senders and receivers both have proofs which confirm the data sent on execution.
* All TXs now have 144 bytes of built-in space for user-defined data.
* The user-defined space has a built-in RPC which can be used to implement most practical use cases. All user-defined data is encrypted.
* The model currently defines data on chain, while execution is delegated to wallet extensions. A dummy pongserver extension example showcases how to enable private purchase/delivery of license keys/information.
* Burn transactions, which burn value, are now working.

### 3.0

* DERO HE implemented.

90

LICENSE

@ -1,90 +0,0 @@

RESEARCH LICENSE

Version 1.1.2

I. DEFINITIONS.

"Licensee" means You and any other party that has entered into and has in effect a version of this License.

"Licensor" means DERO PROJECT (GPG: 0F39 E425 8C65 3947 702A 8234 08B2 0360 A03A 9DE8) and its successors and assignees.

"Modifications" means any (a) change or addition to the Technology or (b) new source or object code implementing any portion of the Technology.

"Research Use" means research, evaluation, or development for the purpose of advancing knowledge, teaching, learning, or customizing the Technology for personal use. Research Use expressly excludes use or distribution for direct or indirect commercial (including strategic) gain or advantage.

"Technology" means the source code, object code and specifications of the technology made available by Licensor pursuant to this License.

"Technology Site" means the website designated by Licensor for accessing the Technology.

"You" means the individual executing this License or the legal entity or entities represented by the individual executing this License.

II. PURPOSE.

Licensor is licensing the Technology under this Research License (the "License") to promote research, education, innovation, and development using the Technology.

COMMERCIAL USE AND DISTRIBUTION OF TECHNOLOGY AND MODIFICATIONS IS PERMITTED ONLY UNDER AN APPROPRIATE COMMERCIAL USE LICENSE AVAILABLE FROM LICENSOR AT <url>.

III. RESEARCH USE RIGHTS.

A. Subject to the conditions contained herein, Licensor grants to You a non-exclusive, non-transferable, worldwide, and royalty-free license to do the following for Your Research Use only:

1. reproduce, create Modifications of, and use the Technology alone, or with Modifications;

2. share source code of the Technology alone, or with Modifications, with other Licensees;

3. distribute object code of the Technology, alone, or with Modifications, to any third parties for Research Use only, under a license of Your choice that is consistent with this License; and

4. publish papers and books discussing the Technology which may include relevant excerpts that do not in the aggregate constitute a significant portion of the Technology.

B. Residual Rights. You may use any information in intangible form that you remember after accessing the Technology, except when such use violates Licensor's copyrights or patent rights.

C. No Implied Licenses. Other than the rights granted herein, Licensor retains all rights, title, and interest in Technology, and You retain all rights, title, and interest in Your Modifications and associated specifications, subject to the terms of this License.

D. Open Source Licenses. Portions of the Technology may be provided with notices and open source licenses from open source communities and third parties that govern the use of those portions, and any licenses granted hereunder do not alter any rights and obligations you may have under such open source licenses, however, the disclaimer of warranty and limitation of liability provisions in this License will apply to all Technology in this distribution.

IV. INTELLECTUAL PROPERTY REQUIREMENTS

As a condition to Your License, You agree to comply with the following restrictions and responsibilities:

A. License and Copyright Notices. You must include a copy of this License in a Readme file for any Technology or Modifications you distribute. You must also include the following statement, "Use and distribution of this technology is subject to the Java Research License included herein", (a) once prominently in the source code tree and/or specifications for Your source code distributions, and (b) once in the same file as Your copyright or proprietary notices for Your binary code distributions. You must cause any files containing Your Modification to carry prominent notice stating that You changed the files. You must not remove or alter any copyright or other proprietary notices in the Technology.

B. Licensee Exchanges. Any Technology and Modifications You receive from any Licensee are governed by this License.

V. GENERAL TERMS.

A. Disclaimer Of Warranties.

TECHNOLOGY IS PROVIDED "AS IS", WITHOUT WARRANTIES OF ANY KIND, EITHER EXPRESS OR IMPLIED INCLUDING, WITHOUT LIMITATION, WARRANTIES THAT ANY SUCH TECHNOLOGY IS FREE OF DEFECTS, MERCHANTABLE, FIT FOR A PARTICULAR PURPOSE, OR NON-INFRINGING OF THIRD PARTY RIGHTS. YOU AGREE THAT YOU BEAR THE ENTIRE RISK IN CONNECTION WITH YOUR USE AND DISTRIBUTION OF ANY AND ALL TECHNOLOGY UNDER THIS LICENSE.

B. Infringement; Limitation Of Liability.

1. If any portion of, or functionality implemented by, the Technology becomes the subject of a claim or threatened claim of infringement ("Affected Materials"), Licensor may, in its unrestricted discretion, suspend Your rights to use and distribute the Affected Materials under this License. Such suspension of rights will be effective immediately upon Licensor's posting of notice of suspension on the Technology Site.

2. IN NO EVENT WILL LICENSOR BE LIABLE FOR ANY DIRECT, INDIRECT, PUNITIVE, SPECIAL, INCIDENTAL, OR CONSEQUENTIAL DAMAGES IN CONNECTION WITH OR ARISING OUT OF THIS LICENSE (INCLUDING, WITHOUT LIMITATION, LOSS OF PROFITS, USE, DATA, OR ECONOMIC ADVANTAGE OF ANY SORT), HOWEVER IT ARISES AND ON ANY THEORY OF LIABILITY (including negligence), WHETHER OR NOT LICENSOR HAS BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGE. LIABILITY UNDER THIS SECTION V.B.2 SHALL BE SO LIMITED AND EXCLUDED, NOTWITHSTANDING FAILURE OF THE ESSENTIAL PURPOSE OF ANY REMEDY.

C. Termination.

1. You may terminate this License at any time by notifying Licensor in writing.

2. All Your rights will terminate under this License if You fail to comply with any of its material terms or conditions and do not cure such failure within thirty (30) days after becoming aware of such noncompliance.

3. Upon termination, You must discontinue all uses and distribution of the Technology, and all provisions of this Section V shall survive termination.

D. Miscellaneous.

1. Trademark. You agree to comply with Licensor's Trademark & Logo Usage Requirements, if any and as modified from time to time, available at the Technology Site. Except as expressly provided in this License, You are granted no rights in or to any Licensor's trademarks now or hereafter used or licensed by Licensor.

2. Integration. This License represents the complete agreement of the parties concerning the subject matter hereof.

3. Severability. If any provision of this License is held unenforceable, such provision shall be reformed to the extent necessary to make it enforceable unless to do so would defeat the intent of the parties, in which case, this License shall terminate.

4. Governing Law. This License is governed by the laws of the United States and the State of California, as applied to contracts entered into and performed in California between California residents. In no event shall this License be construed against the drafter.

5. Export Control. You agree to comply with the U.S. export controls and trade laws of other countries that apply to Technology and Modifications.

READ ALL THE TERMS OF THIS LICENSE CAREFULLY BEFORE ACCEPTING.

BY CLICKING ON THE YES BUTTON BELOW OR USING THE TECHNOLOGY, YOU ARE ACCEPTING AND AGREEING TO ABIDE BY THE TERMS AND CONDITIONS OF THIS LICENSE. YOU MUST BE AT LEAST 18 YEARS OF AGE AND OTHERWISE COMPETENT TO ENTER INTO CONTRACTS.

IF YOU DO NOT MEET THESE CRITERIA, OR YOU DO NOT AGREE TO ANY OF THE TERMS OF THIS LICENSE, DO NOT USE THIS SOFTWARE IN ANY FORM.

237

Readme.md

@ -1,239 +1,4 @@

### Welcome to the DEROHE Testnet

[Explorer](https://testnetexplorer.dero.io) [Source](https://github.com/deroproject/derohe) [Twitter](https://twitter.com/DeroProject) [Discord](https://discord.gg/H95TJDp) [Wiki](https://wiki.dero.io) [Github](https://github.com/deroproject/derohe) [DERO CryptoNote Mainnet Stats](http://network.dero.io) [Mainnet WebWallet](https://wallet.dero.io/)

### DERO HE [DERO Homomorphic Encryption]

[From Wikipedia: ](https://en.wikipedia.org/wiki/Homomorphic_encryption)

**Homomorphic encryption is a form of encryption allowing one to perform calculations on encrypted data without decrypting it first. The result of the computation is in an encrypted form, when decrypted the output is the same as if the operations had been performed on the unencrypted data.**

Homomorphic encryption can be used for privacy-preserving outsourced storage and computation. This allows data to be encrypted and out-sourced to commercial cloud environments for processing, all while encrypted. In highly regulated industries, such as health care, homomorphic encryption can be used to enable new services by removing privacy barriers inhibiting data sharing. For example, predictive analytics in health care can be hard to apply via a third party service provider due to medical data privacy concerns, but if the predictive analytics service provider can operate on encrypted data instead, these privacy concerns are diminished.

**DERO is pleased to announce the release of the DERO Homomorphic Encryption Protocol testnet.**

DERO will migrate from the existing CryptoNote protocol to its own DERO Homomorphic Encryption Blockchain Protocol (DHEBP).

REPO cleaned here.

### Table of Contents [DEROHE]

1. [ABOUT DERO PROJECT](#about-dero-project)
1. [DERO HE Features](#dero-he-features)
1. [DERO HE TX Sizes](#dero-he-tx-sizes)
1. [DERO Crypto](#dero-crypto)
1. [DERO HE PORTS](#derohe-ports)
1. [Technical](#technical)
1. [DERO blockchain salient features](#dero-blockchain-salient-features)
1. [DERO Innovations](#dero-innovations)
1. [Dero DAG](#dero-dag)
1. [Client Protocol](#client-protocol)
1. [Dero Rocket Bulletproofs](#dero-rocket-bulletproofs)
1. [51% Attack Resistant](#51-attack-resistant)
1. [DERO Mining](#dero-mining)
1. [DERO Installation](#dero-installation)
1. [Installation From Source](#installation-from-source)
1. [Installation From Binary](#installation-from-binary)
1. [Next Step After DERO Installation](#next-step-after-dero-installation)
1. [Running DERO Daemon](#running-dero-daemon)
1. [Running DERO wallet](#running-dero-wallet)
1. [DERO Cmdline Wallet](#dero-cmdline-wallet)
1. [DERO WebWallet](#dero-web-wallet)
1. [DERO Gui Wallet](#dero-gui-wallet)
1. [DERO Explorer](#dero-explorer)
1. [Proving DERO Transactions](#proving-dero-transactions)

#### ABOUT DERO PROJECT

[DERO](https://github.com/deroproject/derosuite) is a decentralized DAG (Directed Acyclic Graph) based blockchain with enhanced reliability, privacy, security, and usability. Its consensus algorithm is PoW based on [DERO AstroBWT: ASIC/FPGA/GPU resistant CPU mining algorithm](https://github.com/deroproject/astrobwt). DERO is industry-leading and the first blockchain to have bulletproofs and a TLS-encrypted network.

DERO is the first crypto project to combine a Proof of Work blockchain with a DAG block structure and fully anonymous transactions based on [Homomorphic Encryption](https://en.wikipedia.org/wiki/Homomorphic_encryption). The fully distributed ledger processes transactions with a sixty-second average block time and is secure against majority hashrate attacks. DERO will be the first Homomorphic Encryption based blockchain to have smart contracts on its native chain without any extra layers or secondary blockchains. At present, DERO has smart contracts on the old CryptoNote protocol [testnet](https://github.com/deroproject/documentation/blob/master/testnet/stargate.md).

#### DERO HE Features

1. **Homomorphic account-based model**: the first privacy chain to have this (check blockchain/transaction_execute.go, lines 82-95).
2. Instant account balances [only 66 bytes of data need to be fetched from the blockchain].
3. No more chain scanning or wallet scanning to detect funds, no key images, etc.
4. Truly lightweight and efficient wallets.
5. Fixed per-account cost of 66 bytes in the blockchain [immense scalability].
6. Perfectly anonymous transactions with many-out-of-many proofs [bulletproofs and sigma protocol].
7. Deniability.
8. Fixed transaction size, e.g. ~2.5 KB (ring size 8) or ~3.4 KB (ring size 16), depending on the chosen anonymity group size [logarithmic growth].
9. The anonymity group can be chosen in powers of 2.
10. Allows homomorphic assets (programmable SCs with fixed overhead per asset), with open smart contracts but encrypted data [internal testing/implementation, not on this current testnet branch].
11. Allows open assets (programmable SCs with fixed overhead per asset) [internal testing/implementation, not on this current testnet branch].
12. Allows chain pruning on daemons to control the growth of data on daemons.
13. Transaction generation takes less than 25 ms.
14. Transaction verification takes even less than 25 ms.
15. No trusted setup, no hidden parameters.
16. Pruning of chain/history for immense scalability [while still secured using Merkle proofs].
17. Example disk requirements for 1 billion accounts (assuming the node does not keep the history of transactions, but keeps proofs to prove that it is in sync with all other nodes):

```
Requirement of 1 account = 66 bytes
Assuming storage overhead per account of 128 bytes (constant)
Total requirement = (66 + 128) bytes * 1 billion accounts = 194 GB ~ 200 GB
Assuming we are off by a factor of 4 = 800 GB
```

18. Note that even after 1 trillion transactions, 1 billion accounts will consume only 800 GB if history is not maintained, and everything will still be in a proven state using Merkle roots. So even a Raspberry Pi can host the entire chain.
19. Senders can prove to the receiver what amount they have sent (without revealing themselves).
20. The entire chain is rsyncable while in operation.
21. Testnet released with source code.
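
The disk estimate in item 17 is simple arithmetic. The Go sketch below reproduces it so that the assumptions can be varied. `StorageBytes` is a hypothetical helper. The inputs are the Readme's own figures: 1 billion accounts, 66 bytes of state, 128 bytes of overhead, and a 4x safety factor.

```go
package main

import "fmt"

// StorageBytes estimates pruned-node disk usage: each account costs its state
// plus a constant overhead, multiplied by a safety factor (hypothetical helper).
func StorageBytes(accounts, stateBytes, overheadBytes, safetyFactor int64) int64 {
	return accounts * (stateBytes + overheadBytes) * safetyFactor
}

func main() {
	const accounts = 1000000000 // 1 billion accounts, as in the Readme
	base := StorageBytes(accounts, 66, 128, 1)
	padded := StorageBytes(accounts, 66, 128, 4)
	fmt.Printf("%d GB, %d GB with a 4x margin\n", base/1e9, padded/1e9)
}
```

The program prints `194 GB, 776 GB with a 4x margin`. The Readme rounds these figures up to ~200 GB and 800 GB.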

#### DERO HE TX Sizes

| Ring Size | DEROHE TX Size |
| -------- | -------- |
| 2 | 1553 bytes |
| 4 | 2013 bytes |
| 8 | 2605 bytes |
| 16 | 3461 bytes |
| 32 | 4825 bytes |
| 64 | 7285 bytes |
| 128 | 11839 bytes |
| 512 | ~35000 bytes |
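
Item 9 of the feature list says that the anonymity group must be a power of 2, and every ring size in the table above follows that rule. The Go sketch below checks the rule with the usual `n&(n-1) == 0` bit trick. It also prints the per-member cost computed from the measured sizes; the approximate 512 entry is left out. `IsValidRingSize` and `txSizes` are hypothetical names, not DERO identifiers.

```go
package main

import (
	"fmt"
	"math/bits"
)

// txSizes copies the measured DEROHE TX sizes (bytes) from the table.
var txSizes = map[int]int{2: 1553, 4: 2013, 8: 2605, 16: 3461, 32: 4825, 64: 7285, 128: 11839}

// IsValidRingSize reports whether n is a power of two of at least 2.
func IsValidRingSize(n int) bool { return n >= 2 && n&(n-1) == 0 }

func main() {
	for _, n := range []int{2, 4, 8, 16, 32, 64, 128} {
		// For a power of two, the trailing-zero count equals log2(n).
		fmt.Printf("ring %3d (log2=%d): %5d bytes, %4d bytes/member\n",
			n, bits.TrailingZeros(uint(n)), txSizes[n], txSizes[n]/n)
	}
}
```

The per-member cost falls from 776 bytes at ring size 2 to 92 bytes at ring size 128. This is why larger anonymity groups are comparatively cheap.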

**NB:** There are plans to reduce TX sizes further.

#### DERO Crypto

Secure and fast crypto is a basic necessity of this project, and an adequate amount of time has been devoted to developing, studying, implementing, and auditing it. Most of the crypto, such as ring signatures, has been studied by various researchers and is in production in a number of projects. Bulletproofs have been given a more detailed look, since DERO is the first to implement and deploy them. First, a bare-bones bulletproofs implementation was written. Then implementations in development (Benedikt Bünz, XMR, Dalek Bulletproofs) were studied, and the findings were used to improve our own implementation.

Some new improvements were discovered and implemented, and a number of other improvements are not explained here. The major improvements are in the double-base double-scalar multiplication used while validating bulletproofs. A typical bulletproof takes ~15-17 ms to verify. Optimized bulletproofs take ~1 to ~2 ms (simple bulletproof, no aggregation/batching). Because the bases in bulletproofs are fixed, we can use a precomputed table to convert 64*2 base-scalar multiplications into doublings and additions (NOTE: we do not use Bos-Coster/Pippenger methods). This time can easily be decreased to 0.5 ms with some more optimizations. With batching and aggregation, 5000 range proofs (~2500 TX) can easily be verified even on a laptop. The bulletproofs implementation is in github.com/deroproject/derosuite/crypto/ringct/bulletproof.go, and the optimized version is in github.com/deroproject/derosuite/crypto/ringct/bulletproof_ultrafast.go.

Other optimizations are also possible; for example, base-scalar multiplication could be done in less than a microsecond. Some of these optimizations are not yet deployed and may be deployed at a later stage.

#### DEROHE PORTS

**Mainnet:**

P2P Default Port: 10101

RPC Default Port: 10102

Wallet RPC Default Port: 10103

**Testnet:**

P2P Default Port: 40401

RPC Default Port: 40402

Wallet RPC Default Port: 40403
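
Tooling that talks to a node on either network can keep the default ports above in a single table. The Go sketch below is hypothetical helper code: the `Ports` type and the `RPCAddr` function are not part of DERO. It encodes only the port numbers listed in this Readme and uses the standard library's `net.JoinHostPort` to build an address.

```go
package main

import (
	"fmt"
	"net"
	"strconv"
)

// Ports groups the default DEROHE ports for one network (hypothetical type).
type Ports struct{ P2P, RPC, WalletRPC int }

// defaultPorts mirrors the mainnet/testnet defaults listed in the Readme.
var defaultPorts = map[string]Ports{
	"mainnet": {P2P: 10101, RPC: 10102, WalletRPC: 10103},
	"testnet": {P2P: 40401, RPC: 40402, WalletRPC: 40403},
}

// RPCAddr returns host:port for the daemon RPC of the named network.
func RPCAddr(network, host string) (string, error) {
	p, ok := defaultPorts[network]
	if !ok {
		return "", fmt.Errorf("unknown network %q", network)
	}
	return net.JoinHostPort(host, strconv.Itoa(p.RPC)), nil
}

func main() {
	addr, err := RPCAddr("testnet", "127.0.0.1")
	if err != nil {
		panic(err)
	}
	fmt.Println(addr) // 127.0.0.1:40402
}
```

A node started with non-default ports would need these values overridden.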

#### Technical

For specific details of the current DERO core (daemon) implementation and capabilities, see below:

1. **DAG:** No orphan blocks, no soft forks.
2. **BulletProofs:** Zero-knowledge range proofs (NIZK).
3. **AstroBWT:** A memory-bound algorithm. This provides assurance that all miners are equal (no miner has any advantage over common miners).
4. **P2P Protocol:** This layer controls the exchange of blocks, transactions, and the blockchain itself.
5. **Pedersen Commitment:** (Part of ring confidential transactions): The Pedersen commitment algorithm is a cryptographic primitive that allows a user to commit to a chosen value while keeping it hidden from others. Pedersen commitments are used to hide all amounts without revealing the actual amount. It is a homomorphic commitment scheme.
6. **Homomorphic Encryption:** Homomorphic encryption is used to perform operations such as addition/subtraction to settle balances, with data always encrypted (balances are never decrypted in any form before, during, or after operations).
7. **Homomorphic Ring Confidential Transactions:** Give untraceability, privacy, and fungibility while making sure that the system is stable and secure.
8. **Core-Consensus Protocol implemented:** The consensus protocol serves 2 major purposes:
   1. Protects the system from adversaries, forking, and tampering.
   2. Ensures the next block in the chain is the one and only correct version of truth (balances).
9. **Proof-of-Work (PoW) algorithm:** The PoW part of the core consensus protocol, used to cryptographically prove that X amount of work has been done to successfully find a block.
10. **Difficulty algorithm:** The difficulty algorithm controls the system so that blocks are found at roughly the same speed, irrespective of the amount of mining power deployed.
11. **Serialization/De-serialization of blocks:** Capability to encode/decode/process blocks.
12. **Serialization/De-serialization of transactions:** Capability to encode/decode/process transactions.
13. **Transaction validity and verification:** All transactions flowing within the DERO network are validated and verified.
14. **SOCKS proxy:** A SOCKS proxy has been implemented and integrated within the daemon to decrease user identifiability and improve user anonymity.
15. **Interactive daemon:** Can print blocks, TXs, and even the entire blockchain from within the daemon.
16. **status, diff, print_bc, print_block, print_tx** and several other commands are implemented.
17. The Go DERO daemon supports both mainnet and testnet.
18. **Enhanced Reliability, Privacy, Security, Usability, Portability assured.**
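
The homomorphic property of Pedersen commitments (item 5 above) can be checked numerically. The Go sketch below computes commitments as g^v * h^r mod p in a toy multiplicative group. The prime, the generators, the values, and the blinding factors are all hypothetical. A real deployment uses an elliptic-curve group in which nobody knows the discrete log of h with respect to g. Multiplying two commitments yields a commitment to the sum of the values under the sum of the blinding factors.

```go
package main

import (
	"fmt"
	"math/big"
)

// Toy parameters (hypothetical, insecure): g and h are two quadratic residues
// modulo the safe prime p; a real scheme needs log_g(h) to be unknown.
var (
	p = big.NewInt(2039)
	g = big.NewInt(4)
	h = big.NewInt(9)
)

// Commit returns g^v * h^r mod p, hiding value v behind blinding factor r.
func Commit(v, r int64) *big.Int {
	c := new(big.Int).Exp(g, big.NewInt(v), p)
	c.Mul(c, new(big.Int).Exp(h, big.NewInt(r), p))
	return c.Mod(c, p)
}

// Combine multiplies commitments; the product commits to v1+v2 with r1+r2.
func Combine(a, b *big.Int) *big.Int {
	c := new(big.Int).Mul(a, b)
	return c.Mod(c, p)
}

func main() {
	sum := Combine(Commit(30, 5), Commit(12, 8))
	fmt.Println(sum.Cmp(Commit(42, 13)) == 0) // true
}
```

This property is what allows committed amounts to be checked for balance without being revealed.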

#### DERO blockchain salient features

- [DAG based: no orphan blocks, no soft forks.](#dero-dag)
- [51% attack resistant.](#51-attack-resistant)
- 60-second block time.
- Extremely fast transactions with one minute/block confirmation time.
- SSL/TLS P2P network.
- Homomorphic: fully encrypted blockchain.
- [Dero Fastest Rocket BulletProofs](#dero-rocket-bulletproofs): zero-knowledge range proofs (NIZK).
- Ring signatures.
- Fully auditable supply.
- The DERO blockchain is written from scratch in Golang. [See all unique blockchains from scratch.](https://twitter.com/cryptic_monk/status/999227961059528704)
- Developed and maintained by the original developers.
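
Holding the 60-second block time steady is the job of the difficulty algorithm (item 10 of the Technical list). This Readme does not describe DERO's actual retargeting formula, so the Go sketch below uses a generic, hypothetical rule. The current difficulty is scaled by the ratio of the 60-second target to the observed average block time over a window of timestamps. Each adjustment is clamped to at most a 4x change.

```go
package main

import "fmt"

// targetSeconds is the 60-second block time stated in the Readme.
const targetSeconds = 60.0

// NextDifficulty rescales current difficulty by target / observed average
// block time over timestamps (Unix seconds, oldest first). Hypothetical rule,
// clamped to at most a 4x change in either direction.
func NextDifficulty(current uint64, timestamps []int64) uint64 {
	if len(timestamps) < 2 {
		return current // not enough data to measure block times
	}
	span := timestamps[len(timestamps)-1] - timestamps[0]
	if span < 1 {
		span = 1 // guard against zero or negative spans
	}
	avg := float64(span) / float64(len(timestamps)-1)
	ratio := targetSeconds / avg
	if ratio > 4 {
		ratio = 4
	} else if ratio < 0.25 {
		ratio = 0.25
	}
	next := uint64(float64(current) * ratio)
	if next == 0 {
		next = 1
	}
	return next
}

func main() {
	fmt.Println(NextDifficulty(1000, []int64{0, 30, 60, 90})) // 2000
	fmt.Println(NextDifficulty(1000, []int64{0, 120, 240}))   // 500
}
```

If blocks arrive every 30 seconds, the difficulty doubles. If they arrive every 120 seconds, it halves.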

#### DERO Innovations

The following are DERO's first and leading innovations.

#### DERO DAG

The DERO DAG implementation builds out a main chain from the DAG network of blocks, which contains main blocks (100% reward) and side blocks (8% reward).
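
The reward split described above can be sketched in Go. Only the 100% and 8% figures come from the text. The base reward, the atomic-unit representation, and the `BlockReward` name are hypothetical. The sketch also ignores fees and any other reward rules DERO may apply.

```go
package main

import "fmt"

// BlockReward returns the payout for a block: main blocks earn the full base
// reward and side blocks earn 8% of it. base is in hypothetical atomic units.
func BlockReward(base uint64, sideBlock bool) uint64 {
	if sideBlock {
		return base * 8 / 100
	}
	return base
}

func main() {
	const base = 1000000 // hypothetical base reward
	fmt.Println(BlockReward(base, false), BlockReward(base, true)) // 1000000 80000
}
```

Integer division truncates, so a side-block reward that does not divide evenly is rounded down.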

|

||||

|

||||

|

||||

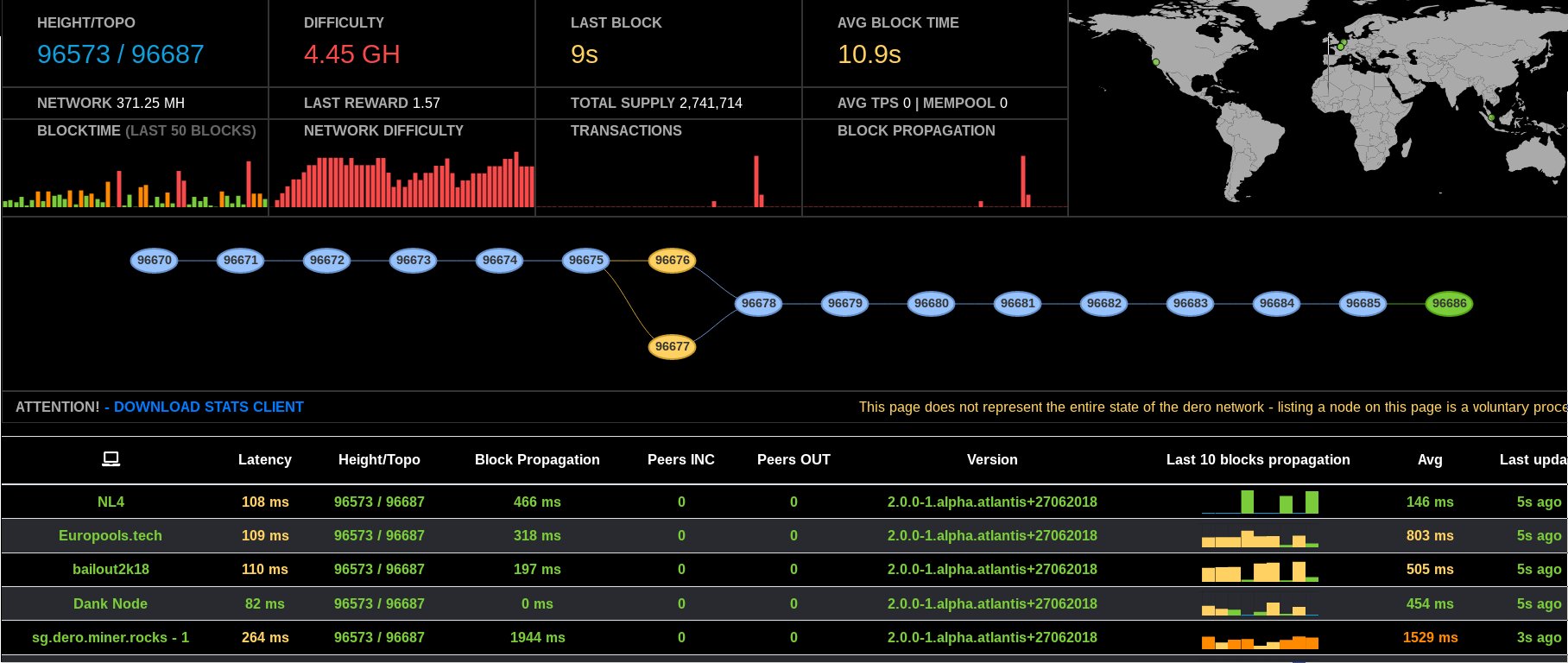

*DERO DAG Screenshot* [Live](https://stats.dero.io/)

|

||||

|

||||

|

||||

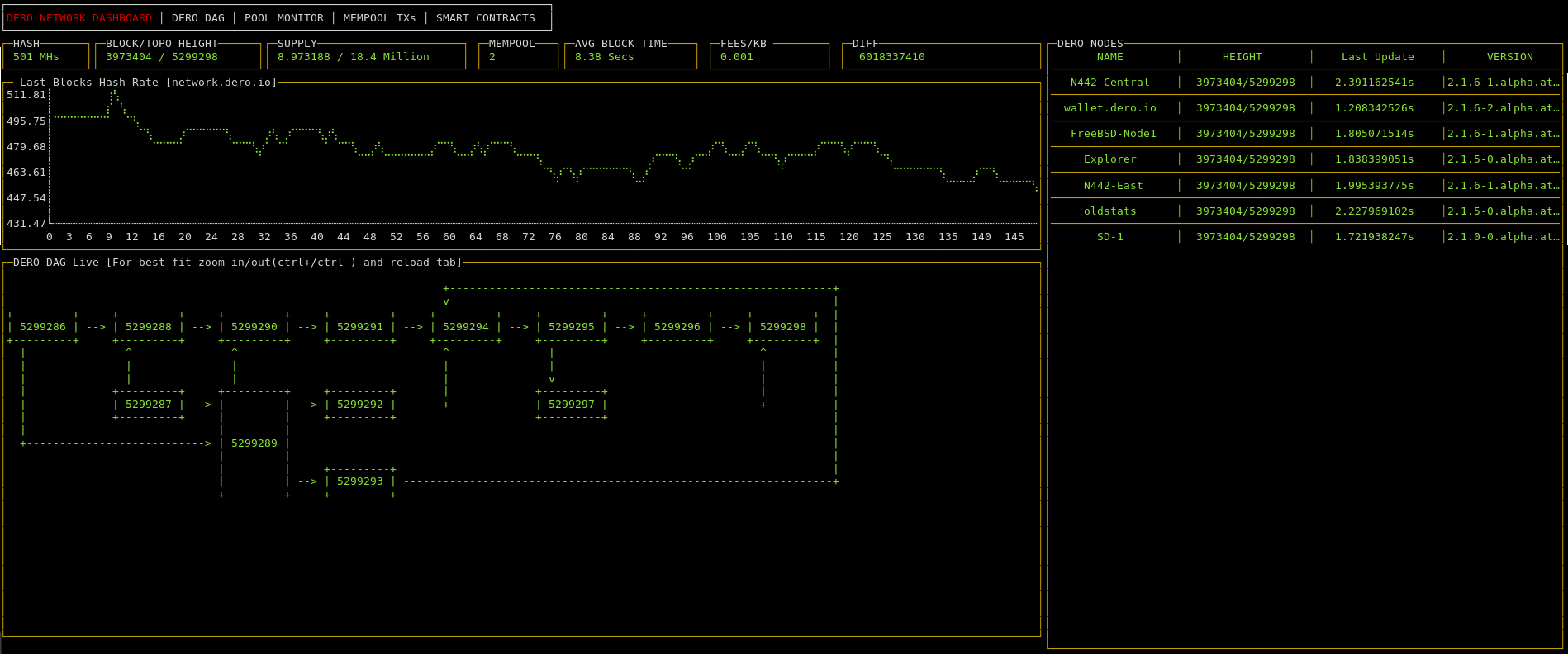

*DERO DAG Screenshot* [Live](https://network.dero.io/)

|

||||

|

||||

#### Client Protocol

|

||||

Traditional Blockchains process blocks as single unit of computation(if a double-spend tx occurs within the block, entire block is rejected). However DERO network accepts such blocks since DERO blockchain considers transaction as a single unit of computation.DERO blocks may contain duplicate or double-spend transactions which are filtered by client protocol and ignored by the network. DERO DAG processes transactions atomically one transaction at a time.
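
The per-transaction approach can be sketched as follows. The Readme does not say what DERO uses to detect conflicting spends, so the `Tx` type and its per-account nonce are hypothetical stand-ins. The point of the sketch is the control flow. Each transaction is applied or skipped on its own, so a duplicate or a double spend is ignored instead of invalidating the whole block.

```go
package main

import "fmt"

// Tx is a hypothetical, simplified transaction that debits one account.
type Tx struct {
	ID      string
	Account string
	Nonce   uint64 // must equal the account's next expected nonce
}

// ApplyBlock processes transactions one at a time. Duplicates and conflicting
// spends are skipped individually instead of rejecting the whole block.
func ApplyBlock(txs []Tx, nextNonce map[string]uint64) (applied, skipped []string) {
	seen := make(map[string]bool)
	for _, tx := range txs {
		if seen[tx.ID] || tx.Nonce != nextNonce[tx.Account] {
			skipped = append(skipped, tx.ID)
			continue
		}
		seen[tx.ID] = true
		nextNonce[tx.Account]++
		applied = append(applied, tx.ID)
	}
	return applied, skipped
}

func main() {
	state := map[string]uint64{"alice": 0, "bob": 0}
	block := []Tx{
		{"t1", "alice", 0},
		{"t1", "alice", 0}, // duplicate of t1
		{"t2", "alice", 0}, // double spend of alice's nonce 0
		{"t3", "bob", 0},
	}
	applied, skipped := ApplyBlock(block, state)
	fmt.Println(applied, skipped) // [t1 t3] [t1 t2]
}
```

The duplicate `t1` and the conflicting `t2` are dropped, while `t3` from another account is still applied.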

#### DERO Rocket Bulletproofs

- DERO's ultrafast bulletproof optimization techniques, in the form used, did not exist anywhere in publicly available cryptography literature at the time of implementation. Please get in touch if such a source/reference exists so it can be included here. The ultrafast optimizations verify DERO bulletproofs 10 times faster than other/original bulletproof implementations. See: https://github.com/deroproject/derosuite/blob/master/crypto/ringct/bulletproof_ultrafast.go

- DERO rocket bulletproof implementations are hardened, which protects DERO from certain classes of attacks.

- DERO rocket bulletproof transaction structures are not compatible with other implementations.

Several further optimizations to DERO rocket bulletproofs are also planned for the near future, which will lead to a several-fold performance boost. They are presently under study for bugs, verification, compatibility, etc.

#### 51% Attack Resistant

The DERO DAG implementation builds out a main chain from the DAG network of blocks, which contains main blocks (100% reward) and side blocks (8% reward). Side blocks contribute to chain PoW security, and thus traditional 51% attacks are not possible on the DERO network. If the DERO network finds another block at the same height, DERO includes both blocks instead of choosing one, thus rendering the 51% attack futile.

#### DERO Mining

[Mining](https://github.com/deroproject/wiki/wiki/Mining)

#### DERO Installation

DERO is written in Golang and is very easy to install, both from source and from binaries.

#### Installation From Source

1. Install Golang; Golang version 1.12.12 is required.
1. In the Go workspace: `go get -u github.com/deroproject/derohe/...`
1. Check the Go workspace bin folder for binaries.
1. For example, on a Linux machine the following binaries will be created:
   1. derod-linux-amd64 -> DERO daemon.
   1. dero-wallet-cli-linux-amd64 -> DERO cmdline wallet.
   1. explorer-linux-amd64 -> DERO Explorer. Yes, DERO also has a prebuilt personal explorer for advanced privacy users.

#### Installation From Binary

Download [DERO binaries](https://github.com/deroproject/derosuite/releases) for ARM, Intel, and Mac platforms and for Windows, Mac, FreeBSD, OpenBSD, Linux, and other operating systems.

Most users require the following binaries:

[Windows 7-10, Server 64bit/amd64](https://github.com/deroproject/derosuite/releases/download/v2.1.6-1/dero_windows_amd64_2.1.6-1.alpha.atlantis.07032019.zip)

[Windows 32bit/x86/386](https://github.com/deroproject/derosuite/releases/download/v2.1.6-1/dero_windows_x86_2.1.6-1.alpha.atlantis.07032019.zip)

[Linux 64bit/amd64](https://github.com/deroproject/derosuite/releases/download/v2.1.6-1/dero_linux_amd64_2.1.6-1.alpha.atlantis.07032019.tar.gz)

[Linux 32bit/x86](https://github.com/deroproject/derosuite/releases/download/v2.1.6-1/dero_linux_386_2.1.6-1.alpha.atlantis.07032019.tar.gz)

[FreeBSD 64bit/amd64](https://github.com/deroproject/derosuite/releases/download/v2.1.6-1/dero_freebsd_amd64_2.1.6-1.alpha.atlantis.07032019.tar.gz)

[OpenBSD 64bit/amd64](https://github.com/deroproject/derosuite/releases/download/v2.1.6-1/dero_openbsd_amd64_2.1.6-1.alpha.atlantis.07032019.tar.gz)

[Mac OS](https://github.com/deroproject/derosuite/releases/download/v2.1.6-1/dero_apple_mac_darwin_amd64_2.1.6-1.alpha.atlantis.07032019.tar.gz)

Contact us for support of other hardware and operating systems.

#### Next Step After DERO Installation

Running the DERO daemon supports the DERO network and shows your support for privacy.

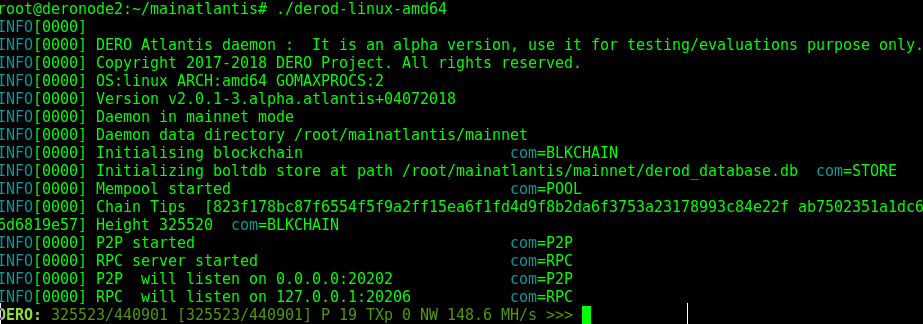

#### Running DERO Daemon

Run derod.exe or derod-linux-amd64, depending on your operating system. The daemon will start syncing.

1. DERO daemon core cryptography is highly optimized and fast.
1. Use a dedicated machine and an SSD for best results.
1. A VPS with 2-4 cores, 4 GB RAM, and 15 GB of disk is recommended.

*DERO Daemon Screenshot*

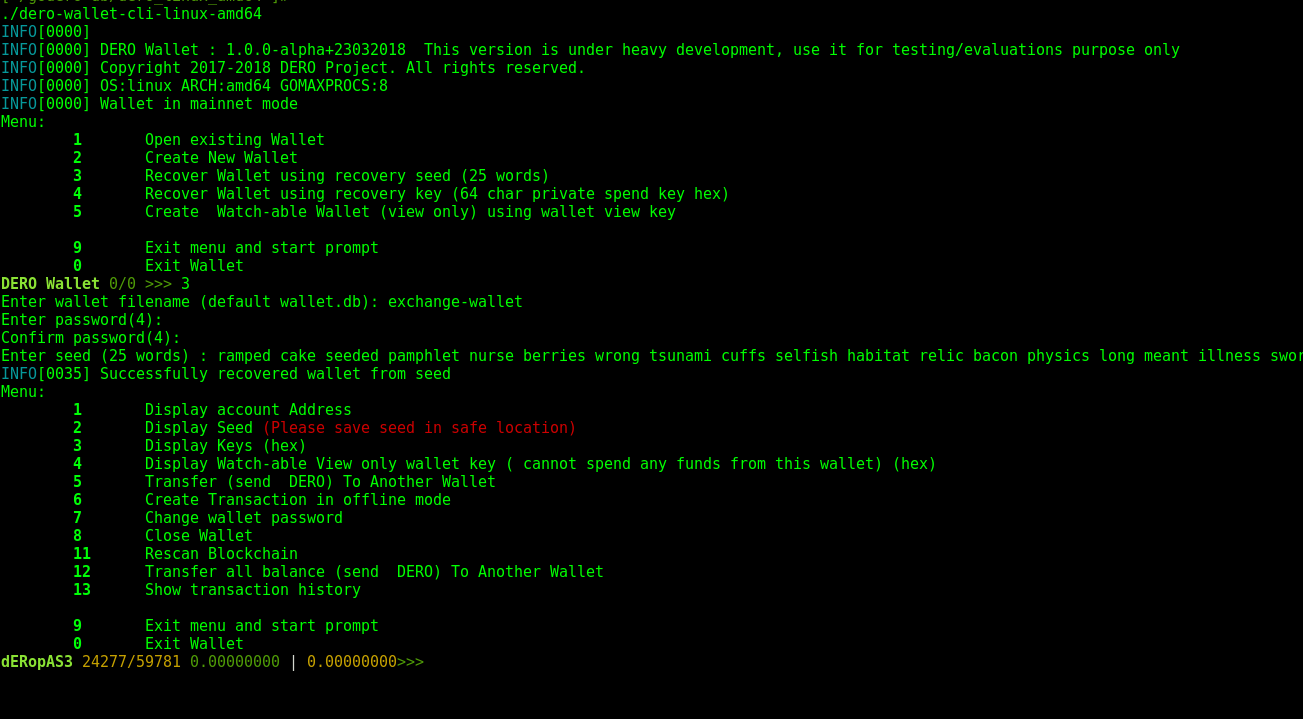

#### Running DERO Wallet

The DERO cmdline wallet is the most reliable and secure wallet and supports all functions.

#### DERO Cmdline Wallet

The DERO cmdline wallet is menu based and easy to operate. Use its options to create or recover a wallet, transfer balances, and so on.

**NOTE:** By default, the DERO cmdline wallet connects to a DERO daemon running on the local machine on port 20206.

If no local DERO daemon is running, start the DERO wallet with the --remote option as follows:

**./dero-wallet-cli-linux-amd64 --remote**

*DERO Cmdline Wallet Screenshot*

#### DERO Explorer

[DERO Explorer](https://explorer.dero.io/) is used to check and confirm transactions on the DERO network.

[DERO testnet Explorer](https://testnetexplorer.dero.io/) is used to check and confirm transactions on the DERO testnet.

DERO users can run their own explorer on their local machine and [browse](http://127.0.0.1:8080) it on local port 8080.

*DERO EXPLORER Screenshot*

#### Proving DERO Transactions

The DERO blockchain is completely private, so no one can view, confirm, or verify another user's wallet balance or transactions.

To prove a transaction, you therefore need its *TXID* and its *deroproof*.

The deroproof can be obtained with the get_tx_key command in dero-wallet-cli.

Enter the *TXID* and *deroproof* in the [DERO EXPLORER](https://testnetexplorer.dero.io).

*DERO Explorer Proving Transaction*

Start.md

@ -1,30 +0,0 @@

### 1] DEROHE Installation: https://github.com/deroproject/derohe

DERO is written in Go and is easy to install from either source or binaries.

Installation From Source:

Install Go; Go 1.15 or later is required.

In your Go workspace, run: go get -u github.com/deroproject/derohe/...

Check the Go workspace bin folder for the binaries.

For example, on a Linux machine the following binaries will be created:

derod-linux-amd64 -> DERO daemon.

dero-wallet-cli-linux-amd64 -> DERO cmdline wallet.

explorer-linux-amd64 -> DERO Explorer. Yes, DERO also ships a prebuilt personal explorer for advanced privacy users.

Installation From Binary:

Download DERO binaries for ARM, Intel, and Mac platforms and for Windows, macOS, FreeBSD, OpenBSD, Linux, and other operating systems:

https://github.com/deroproject/derohe/releases

### 2] Running DERO Daemon

./derod-linux-amd64

### 3] Running DERO Wallet (Use local or remote daemon)

./dero-wallet-cli-linux-amd64 --remote

Web wallet: https://wallet.dero.io

### 4] DERO Mining Quickstart

Run the miner with your wallet address and a number of threads suited to your CPU:

./dero-miner --mining-threads 2 --daemon-rpc-address=http://testnetexplorer.dero.io:40402 --wallet-address deto1qxsplx7vzgydacczw6vnrtfh3fxqcjevyxcvlvl82fs8uykjkmaxgfgulfha5

NOTE: Miners should keep their system clock synchronized via NTP or similar.

E.g., on a Linux machine: ntpdate pool.ntp.org

For details, visit http://wiki.dero.io

@ -1,26 +0,0 @@

Copyright (c) 2020 DERO Foundation. All rights reserved.

Redistribution and use in source and binary forms, with or without modification,
are permitted provided that the following conditions are met:

1. Redistributions of source code must retain the above copyright notice,
this list of conditions and the following disclaimer.

2. Redistributions in binary form must reproduce the above copyright notice,
this list of conditions and the following disclaimer in the documentation
and/or other materials provided with the distribution.

3. Neither the name of the copyright holder nor the names of its contributors
may be used to endorse or promote products derived from this software without
specific prior written permission.

THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS"
AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE
IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE
ARE DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE
LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL
DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR
SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER
CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY,
OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE
USE OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

@ -1,230 +0,0 @@

package astrobwt

import (
	//"fmt"
	"encoding/binary"
	"errors"
	"sort"
	"strings"

	"golang.org/x/crypto/salsa20/salsa"
	"golang.org/x/crypto/sha3"
)

// see here to improve the algorithms more https://github.com/y-256/libdivsufsort/blob/wiki/SACA_Benchmarks.md

// ErrInvalidSuffixArray is returned when len(sa) != len(s)+1 or sa[0] != len(s).
var ErrInvalidSuffixArray = errors.New("bwt: invalid suffix array")

// Transform returns the Burrows–Wheeler transform of a byte slice, writing es
// as the end-of-string marker.
// See https://en.wikipedia.org/wiki/Burrows%E2%80%93Wheeler_transform
func Transform(s []byte, es byte) ([]byte, error) {
	sa := SuffixArray(s)
	bwt, err := FromSuffixArray(s, sa, es)
	return bwt, err
}

// InverseTransform reverses the BWT back to the original byte slice. es is the
// end-of-string marker present in t; it must sort before every byte of the
// original input, matching the order used by Transform. Not optimized yet: it
// rebuilds the whole table of rotations, so it is only practical for short inputs.
func InverseTransform(t []byte, es byte) []byte {
	le := len(t)
	table := make([]string, le)
	for range table {
		for i := 0; i < le; i++ {
			table[i] = string(t[i:i+1]) + table[i]
		}
		sort.Strings(table)
	}
	marker := string([]byte{es}) // use the caller's marker, not a hard-coded "$"
	for _, row := range table {
		if strings.HasSuffix(row, marker) {
			return []byte(row[:le-1])
		}
	}
	return []byte("")

	/*
		n := len(t)
		lines := make([][]byte, n)
		for i := 0; i < n; i++ {
			lines[i] = make([]byte, n)
		}

		for i := 0; i < n; i++ {
			for j := 0; j < n; j++ {
				lines[j][n-1-i] = t[j]
			}
			sort.Sort(byteutil.SliceOfByteSlice(lines))
		}

		s := make([]byte, n-1)
		for _, line := range lines {
			if line[n-1] == es {
				s = line[0 : n-1]
				break
			}
		}
		return s
	*/
}

// SuffixArray returns the suffix array of s including the empty suffix:
// sa[0] == len(s), followed by the sorted suffix positions of s.
func SuffixArray(s []byte) []int {
	_sa := New(s)
	sa := make([]int, len(s)+1)
	sa[0] = len(s)
	for i := 0; i < len(s); i++ {
		sa[i+1] = int(_sa.sa.int32[i])
	}
	return sa
}

// FromSuffixArray computes the BWT of s from its suffix array sa (as returned
// by SuffixArray), writing es at the position of the end-of-string marker.
func FromSuffixArray(s []byte, sa []int, es byte) ([]byte, error) {
	if len(s)+1 != len(sa) || sa[0] != len(s) {
		return nil, ErrInvalidSuffixArray
	}
	if len(s) == 0 {
		// The BWT of the empty string is just the end-of-string marker;
		// without this guard s[len(s)-1] below would panic.
		return []byte{es}, nil
	}
	bwt := make([]byte, len(sa))
	bwt[0] = s[len(s)-1]
	for i := 1; i < len(sa); i++ {
		if sa[i] == 0 {
			bwt[i] = es
		} else {
			bwt[i] = s[sa[i]-1]
		}
	}
	return bwt, nil
}

// BWT computes the Burrows–Wheeler transform of input, which must be
// non-empty. It returns the transform (len(input)+1 bytes) and the index of
// the end-of-string position; the byte at that index is left as 0.
func BWT(input []byte) ([]byte, int) {
	if len(input) >= maxData32 {
		panic("input too big to handle")
	}
	sa := make([]int32, len(input)+1)
	text_32(input, sa[1:])

	bwt := make([]byte, len(input)+1)
	bwt[0] = input[len(input)-1]
	emarker := 0
	for i := 1; i < len(sa); i++ {
		if sa[i] == 0 {
			//bwt[i] = '$' //es
			emarker = i
		} else {
			bwt[i] = input[sa[i]-1]
		}
	}
	//bwt[emarker] = '$'
	return bwt, emarker
}

const stage1_length int = 147253 // it is a prime

const MAX_LENGTH int = 1024*1024 + stage1_length + 1024

// POW computes the AstroBWT proof-of-work hash of inputdata:
//  1. key = SHA3-256(inputdata); stage1 = 147253 bytes of Salsa20 keystream under key.
//  2. key = SHA3-256(BWT(stage1)).
//  3. stage2 = stage1_length + (low 20 bits of key) bytes of Salsa20 keystream under the new key.
//  4. The first 18 bytes of BWT(stage2) are overwritten with "Broken for testnet"
//     and the hash is SHA3-256 of that buffer.
func POW(inputdata []byte) (outputhash [32]byte) {
	var counter [16]byte

	key := sha3.Sum256(inputdata)

	var stage1 [stage1_length]byte // stages are taken from it
	var stage2 [1024*1024 + stage1_length + 1024]byte

	salsa.XORKeyStream(stage1[:stage1_length], stage1[:stage1_length], &counter, &key)

	stage1_result, eos := BWT(stage1[:stage1_length])

	key = sha3.Sum256(stage1_result)

	stage2_length := stage1_length + int(binary.LittleEndian.Uint32(key[:])&0xfffff)

	for i := range counter { // will be optimized by compiler
		counter[i] = 0
	}

	salsa.XORKeyStream(stage2[:stage2_length], stage2[:stage2_length], &counter, &key)

	stage2_result, eos := BWT(stage2[:stage2_length])

	// fmt.Printf("result %x stage2_length %d \n", key, stage2_length)
	copy(stage2_result[:], []byte("Broken for testnet"))
	key = sha3.Sum256(stage2_result)

	//fmt.Printf("result %x\n", key)

	copy(outputhash[:], key[:])

	_ = eos
	return
}

// BWT_0alloc is BWT with caller-provided buffers: input must be non-empty, and
// sa and bwt must both have length len(input)+1. It returns the index of the
// end-of-string position. The byte at that index is not written, so bwt
// should be zeroed beforehand to match BWT's output.
func BWT_0alloc(input []byte, sa []int32, bwt []byte) int {
	//ix := &Index{data: input}
	if len(input) >= maxData32 {
		panic("input too big to handle")
	}
	if len(sa) != len(input)+1 {
		panic("invalid sa array")
	}
	if len(bwt) != len(input)+1 {
		panic("invalid bwt array")
	}

	//sa := make([]int32, len(input)+1)
	text_32(input, sa[1:])

	//bwt := make([]byte, len(input)+1)
	bwt[0] = input[len(input)-1]
	emarker := 0
	for i := 1; i < len(sa); i++ {
		if sa[i] == 0 {
			//bwt[i] = '$' //es
			emarker = i
		} else {
			bwt[i] = input[sa[i]-1]
		}
	}
	//bwt[emarker] = '$'
	return emarker
}

// POW_0alloc computes the same hash as POW, but declares all of its buffers
// as fixed-size arrays up front instead of letting BWT allocate them.
func POW_0alloc(inputdata []byte) (outputhash [32]byte) {
	var counter [16]byte

	var sa [MAX_LENGTH]int32
	// var bwt [max_length]int32

	var stage1 [stage1_length]byte // stages are taken from it
	var stage1_result [stage1_length + 1]byte
	var stage2 [1024*1024 + stage1_length + 1]byte
	var stage2_result [1024*1024 + stage1_length + 1]byte

	key := sha3.Sum256(inputdata)

	salsa.XORKeyStream(stage1[:stage1_length], stage1[:stage1_length], &counter, &key)

	eos := BWT_0alloc(stage1[:stage1_length], sa[:stage1_length+1], stage1_result[:stage1_length+1])

	key = sha3.Sum256(stage1_result[:])

	stage2_length := stage1_length + int(binary.LittleEndian.Uint32(key[:])&0xfffff)

	for i := range counter { // will be optimized by compiler
		counter[i] = 0
	}

	salsa.XORKeyStream(stage2[:stage2_length], stage2[:stage2_length], &counter, &key)

	for i := range sa {
		sa[i] = 0
	}

	eos = BWT_0alloc(stage2[:stage2_length], sa[:stage2_length+1], stage2_result[:stage2_length+1])
	_ = eos
	copy(stage2_result[:], []byte("Broken for testnet"))
	key = sha3.Sum256(stage2_result[:stage2_length+1])

	copy(outputhash[:], key[:])
	return
}

@ -1,225 +0,0 @@

package astrobwt

import (
	//"fmt"
	//"os"
	"encoding/binary"
	"sync"

	"golang.org/x/crypto/salsa20/salsa"
	"golang.org/x/crypto/sha3"
)

// see here to improve the algorithms more https://github.com/y-256/libdivsufsort/blob/wiki/SACA_Benchmarks.md

// The original implementation was in the xmrig miner; it had a flaw, which has been fixed.
// This optimized algorithm is used only in the miner, not in the blockchain.

//const stage1_length int = 147253 // it is a prime
//const max_length int = 1024*1024 + stage1_length + 1024

// Data holds the scratch buffers for one POW_optimized_v2 call (over 20 MB).
// The stage buffers have a leading zero byte and extra zero padding because
// sort_indices reads 8-byte big-endian words past the end of the data.
type Data struct {
	stage1        [stage1_length + 64]byte // stages are taken from it
	stage1_result [stage1_length + 1]byte
	stage2        [1024*1024 + stage1_length + 1 + 64]byte
	stage2_result [1024*1024 + stage1_length + 1]byte
	indices       [ALLOCATION_SIZE]uint64
	tmp_indices   [ALLOCATION_SIZE]uint64
}

// pool recycles Data buffers between hash computations.
var pool = sync.Pool{New: func() interface{} { return &Data{} }}

// POW_optimized_v1 runs POW_optimized_v2 with a pooled Data buffer. It returns
// success == false and an all-0xff hash when the stage-2 length exceeds max_limit.
func POW_optimized_v1(inputdata []byte, max_limit int) (outputhash [32]byte, success bool) {
	data := pool.Get().(*Data)
	outputhash, success = POW_optimized_v2(inputdata, max_limit, data)
	pool.Put(data)
	return
}

// POW_optimized_v2 computes the hash using the caller-provided scratch buffers in data.
func POW_optimized_v2(inputdata []byte, max_limit int, data *Data) (outputhash [32]byte, success bool) {
	var counter [16]byte

	for i := range data.stage1 {
		data.stage1[i] = 0
	}
	/* for i := range data.stage1_result{
		data.stage1_result[i] =0
	}*/

	key := sha3.Sum256(inputdata)
	salsa.XORKeyStream(data.stage1[1:stage1_length+1], data.stage1[1:stage1_length+1], &counter, &key)
	sort_indices(stage1_length+1, data.stage1[:], data.stage1_result[:], data)
	key = sha3.Sum256(data.stage1_result[:])
	stage2_length := stage1_length + int(binary.LittleEndian.Uint32(key[:])&0xfffff)

	if stage2_length > max_limit {
		for i := range outputhash { // will be optimized by compiler
			outputhash[i] = 0xff
		}
		success = false
		return
	}

	for i := range counter { // will be optimized by compiler
		counter[i] = 0
	}

	salsa.XORKeyStream(data.stage2[1:stage2_length+1], data.stage2[1:stage2_length+1], &counter, &key)
	sort_indices(stage2_length+1, data.stage2[:], data.stage2_result[:], data)

	copy(data.stage2_result[:], []byte("Broken for testnet"))
	key = sha3.Sum256(data.stage2_result[:stage2_length+1])
	for i := range data.stage2 {
		data.stage2[i] = 0
	}

	copy(outputhash[:], key[:])
	success = true
	return
}

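// sort_indices radix-sorts on the top 2*COUNTING_SORT_BITS bits of each key,
// one COUNTING_SORT_BITS-wide digit per counting-sort pass.
const COUNTING_SORT_BITS uint64 = 10
const COUNTING_SORT_SIZE uint64 = 1 << COUNTING_SORT_BITS

const ALLOCATION_SIZE = MAX_LENGTH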

// BigEndian_Uint64 decodes the first 8 bytes of b as a big-endian uint64
// (the same result as binary.BigEndian.Uint64).
func BigEndian_Uint64(b []byte) uint64 {
	_ = b[7] // bounds check hint to compiler; see golang.org/issue/14808
	return uint64(b[7]) | uint64(b[6])<<8 | uint64(b[5])<<16 | uint64(b[4])<<24 |
		uint64(b[3])<<32 | uint64(b[2])<<40 | uint64(b[1])<<48 | uint64(b[0])<<56
}

// smaller reports whether packed value a sorts before packed value b. Each
// value holds the top 43 bits of a suffix's first 8 bytes above a 21-bit
// suffix position; ties are broken by the 8 bytes at offset 5 of each suffix,
// so at most a 13-byte prefix is compared.
func smaller(input []uint8, a, b uint64) bool {
	value_a := a >> 21
	value_b := b >> 21

	if value_a < value_b {
		return true
	}
	if value_a > value_b {
		return false
	}

	data_a := BigEndian_Uint64(input[(a%(1<<21))+5:])
	data_b := BigEndian_Uint64(input[(b%(1<<21))+5:])
	return data_a < data_b
}

// sort_indices writes the BWT of the N-1 data bytes in input_extra[1:N] to
// output[:N]. input_extra[0] must be 0 and the data must be followed by zero
// padding, because keys are read as 8-byte big-endian words; the zero padding
// stands in for the end-of-string sentinel. Suffixes are ordered by at most
// their first 13 bytes: a two-pass radix sort on the top 20 bits, then an
// insertion sort using smaller.
func sort_indices(N int, input_extra []byte, output []byte, d *Data) {
	var counters [2][COUNTING_SORT_SIZE]uint32
	indices := d.indices[:]
	tmp_indices := d.tmp_indices[:]

	input := input_extra[1:]

	// Histogram both 10-bit digits of every suffix key (unrolled by 3).
	loop3 := N / 3 * 3
	for i := 0; i < loop3; i += 3 {
		k0 := BigEndian_Uint64(input[i:])
		counters[0][(k0>>(64-COUNTING_SORT_BITS*2))&(COUNTING_SORT_SIZE-1)]++
		counters[1][k0>>(64-COUNTING_SORT_BITS)]++
		k1 := k0 << 8
		counters[0][(k1>>(64-COUNTING_SORT_BITS*2))&(COUNTING_SORT_SIZE-1)]++
		counters[1][k1>>(64-COUNTING_SORT_BITS)]++
		k2 := k0 << 16
		counters[0][(k2>>(64-COUNTING_SORT_BITS*2))&(COUNTING_SORT_SIZE-1)]++
		counters[1][k2>>(64-COUNTING_SORT_BITS)]++
	}

	if N%3 != 0 {
		for i := loop3; i < N; i++ {
			k := BigEndian_Uint64(input[i:])
			counters[0][(k>>(64-COUNTING_SORT_BITS*2))&(COUNTING_SORT_SIZE-1)]++
			counters[1][k>>(64-COUNTING_SORT_BITS)]++
		}
	}

	/*
		for i := 0; i < N ; i++{
			k := BigEndian_Uint64(input[i:])
			counters[0][(k >> (64 - COUNTING_SORT_BITS * 2)) & (COUNTING_SORT_SIZE - 1)]++
			counters[1][k >> (64 - COUNTING_SORT_BITS)]++
		}
	*/

	// Turn the histograms into the last index of each bucket.
	prev := [2]uint32{counters[0][0], counters[1][0]}
	counters[0][0] = prev[0] - 1
	counters[1][0] = prev[1] - 1
	var cur [2]uint32
	for i := uint64(1); i < COUNTING_SORT_SIZE; i++ {
		cur[0], cur[1] = counters[0][i]+prev[0], counters[1][i]+prev[1]
		counters[0][i] = cur[0] - 1
		counters[1][i] = cur[1] - 1
		prev[0] = cur[0]
		prev[1] = cur[1]
	}

	// First pass (scanning backwards, so it is stable): scatter by the lower
	// digit (key bits 44..53), packing the top 43 key bits with the position.
	for i := N - 1; i >= 0; i-- {
		k := BigEndian_Uint64(input[i:])
		// 0xFFFFFFFFFFE00000 == ^uint64(0) << 21, i.e. it clears the low 21 bits
		tmp := counters[0][(k>>(64-COUNTING_SORT_BITS*2))&(COUNTING_SORT_SIZE-1)]
		counters[0][(k>>(64-COUNTING_SORT_BITS*2))&(COUNTING_SORT_SIZE-1)]--
		tmp_indices[tmp] = (k & 0xFFFFFFFFFFE00000) | uint64(i)
	}

	// Second pass: stable scatter by the upper digit (key bits 54..63).
	for i := N - 1; i >= 0; i-- {
		data := tmp_indices[i]
		tmp := counters[1][data>>(64-COUNTING_SORT_BITS)]
		counters[1][data>>(64-COUNTING_SORT_BITS)]--
		indices[tmp] = data
	}

	// Insertion sort fixes the order of suffixes that share their top 20 bits.
	prev_t := indices[0]
	for i := 1; i < N; i++ {
		t := indices[i]
		if smaller(input, t, prev_t) {
			t2 := prev_t
			j := i - 1
			for {
				indices[j+1] = prev_t
				j--
				if j < 0 {
					break
				}
				prev_t = indices[j]
				if !smaller(input, t, prev_t) {
					break
				}
			}
			indices[j+1] = t
			t = t2
		}
		prev_t = t
	}

	// optimized unrolled code below this comment
	/*for i := 0; i < N;i++{
		output[i] = input_extra[indices[i] & ((1 << 21) - 1) ]
	}*/

	// Unrolled by 4; (N/4)*4 keeps every write inside output[:N].
	loop4 := (N / 4) * 4
	for i := 0; i < loop4; i += 4 {
		output[i+0] = input_extra[indices[i+0]&((1<<21)-1)]
		output[i+1] = input_extra[indices[i+1]&((1<<21)-1)]
		output[i+2] = input_extra[indices[i+2]&((1<<21)-1)]
		output[i+3] = input_extra[indices[i+3]&((1<<21)-1)]
	}
	for i := loop4; i < N; i++ {
		output[i] = input_extra[indices[i]&((1<<21)-1)]
	}

	// Known issue: if the last byte of input is 0x00, the initial output bytes
	// are wrong; this fix may not be complete.
	if N > 3 && input[N-2] == 0 {
		backup_byte := output[0]
		output[0] = 0
		for i := 1; i < N; i++ {
			if output[i] != 0 {
				output[i-1] = backup_byte
				break
			}
		}
	}
}
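sort_indices packs each suffix's 8-byte big-endian key and its position into one uint64. It then orders the packed values with two stable 10-bit counting passes, followed by an insertion-sort fix-up. The Go sketch below isolates those two ideas. `pack`, `unpack`, `countingPass` and `sortTop20` are hypothetical names, not package functions.

Why 21 low bits suffice for the position: 2^21 = 2,097,152, which exceeds MAX_LENGTH (1,196,853). The counting pass here uses bucket start offsets and a forward scan, whereas sort_indices uses bucket end offsets and a backward scan. Both are stable.

```go
package main

import (
	"encoding/binary"
	"fmt"
)

const posBits = 21                 // low bits holding the suffix position
const posMask = (1 << posBits) - 1 // 0x1FFFFF
const digitBits = 10               // mirrors COUNTING_SORT_BITS
const digitSize = 1 << digitBits   // mirrors COUNTING_SORT_SIZE

// pack keeps the top 43 bits of key k and stores pos in the low 21 bits,
// like (k & 0xFFFFFFFFFFE00000) | uint64(i) in sort_indices.
func pack(k uint64, pos int) uint64 { return (k &^ posMask) | uint64(pos) }

// unpack returns the 43-bit key prefix (compared first by smaller) and the position.
func unpack(v uint64) (uint64, int) { return v >> posBits, int(v & posMask) }

// countingPass stably scatters src into dst by the 10-bit digit at shift.
func countingPass(src, dst []uint64, shift uint) {
	var start [digitSize + 1]int
	for _, k := range src {
		start[(k>>shift)&(digitSize-1)+1]++
	}
	for d := 1; d <= digitSize; d++ {
		start[d] += start[d-1] // start[d] = number of keys with a smaller digit
	}
	for _, k := range src {
		d := (k >> shift) & (digitSize - 1)
		dst[start[d]] = k
		start[d]++
	}
}

// sortTop20 stably sorts keys by their top 20 bits with two LSD passes:
// bits 44..53 first, then bits 54..63, the same digits sort_indices uses.
func sortTop20(keys []uint64) []uint64 {
	tmp := make([]uint64, len(keys))
	out := make([]uint64, len(keys))
	countingPass(keys, tmp, 64-2*digitBits)
	countingPass(tmp, out, 64-digitBits)
	return out
}

func main() {
	s := []byte("abracadabra")
	padded := append(append([]byte{}, s...), make([]byte, 8)...) // room for 8-byte reads
	keys := make([]uint64, len(s))
	for i := range s {
		keys[i] = pack(binary.BigEndian.Uint64(padded[i:]), i)
	}
	for _, v := range sortTop20(keys) {
		_, pos := unpack(v)
		fmt.Printf("%d:%s ", pos, s[pos:])
	}
	fmt.Println()
}
```

In the example, suffixes 0 ("abracadabra") and 7 ("abra") share their top 20 bits, so the radix passes leave them in position order. In sort_indices it is the insertion-sort pass using smaller that then puts "abra" first.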

@ -1,105 +0,0 @@

package astrobwt

import (
	"crypto/rand"
	"encoding/hex"
	"strings"
	"testing"
)

// see https://www.geeksforgeeks.org/burrows-wheeler-data-transform-algorithm/
func TestBWTTransform(t *testing.T) {
	tests := []struct {
		input string
		bwt   string
	}{
		{"BANANA", "ANNB$AA"}, // from https://www.geeksforgeeks.org/burrows-wheeler-data-transform-algorithm/
		{"abracadabra", "ard$rcaaaabb"},
		{"appellee", "e$elplepa"},
		{"GATGCGAGAGATG", "GGGGGGTCAA$TAA"},
	}
	for _, test := range tests {
		// sort_indices expects a leading zero byte and zero padding after the data.
		input := "\x00" + test.input + "\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00"

		output := make([]byte, 64)
		sort_indices(len(test.input)+1, []byte(input), output, &Data{})

		output = output[:len(test.input)+1]
		output_s := strings.Replace(string(output), "\x00", "$", -1)

		if output_s != test.bwt {
			t.Errorf("Test failed: Transform %s %s %x", output_s, test.bwt, output)
		}
	}
}

func TestPOW_optimized_v1(t *testing.T) {
	p := POW([]byte{0, 0, 0, 0})
	p0 := POW_0alloc([]byte{0, 0, 0, 0})
	p_optimized, _ := POW_optimized_v1([]byte{0, 0, 0, 0}, MAX_LENGTH)
	if p != p0 {
		t.Error("Test failed: POW and POW_0alloc return different results")
	}
	if p != p_optimized {
		t.Error("Test failed: POW and POW_optimized_v1 return different results")
	}

	for i := 20; i < 200; i++ {
		buf := make([]byte, 20)
		rand.Read(buf)

		p := POW(buf)
		p0 := POW_0alloc(buf)
		p_optimized, _ := POW_optimized_v1(buf, MAX_LENGTH)
		if p != p0 {
			t.Errorf("Test failed: POW and POW_0alloc return different results for i=%d buf %x", i, buf)
		}
		if p != p_optimized {
			t.Errorf("Test failed: POW and POW_optimized_v1 return different results for i=%d buf %x", i, buf)
		}
	}
}

func TestPOW_optimized_v1Tests(t *testing.T) {
	tests := []struct {
		input string
	}{
		{"57b84420d2028aef8a05b42ea893a8c4f2219f73"},
		{"67d49bd1c53645ec96c50230083e55b120b5005ffbaf4b2f"},
		{"67d49bd1c53645ec96c50230183e55b120b5005ffbaf4b2f"},
		{"3fe7baa520edb5d0b43b7a6999c146262b2c7f26e030fd7c256611262db727833a40a79f9988"},
		{"ed32ea6f1c0ee2514eb73f6a0f9f00d1e2c2392a8896963eefddfd6600c105d52db6e93ba98f8454433894293eaa9f31973658c49f67f3361af70fac27bac6f8f5c69f52be9d7c86a5fea3e5b5d99d8f73888b4d4a7dbb28169035583632ef26604e472eb26a5da9da4e95f80460dfc7b788e8ee75194a7d2f1190a788f92e98cb83fd4c63d9976ec06c2df005d321baed360599af58ff45aa63b00261ea60b5adf623f256bfbc75da961c5960db68e8"},
		{"7cf76f0d4072574bae246c4f7184000af5ce818943605151a73a49d7b704c127891e6e7008c331fa41776540b0db3b2ea2c187e119191adde6b0f5438fb48cc242c02420f44d070ef4c87a00952560f2ffcc5ac5932c5a0f40df9029ddc10d29b23ff4150fbe0dda5b14a73eadd90a3b6eaf049075b89c1c16da33f049c3235f158c"},
		{"fbaf4f7ebec36c97f8994e67e74b281960846e6b5ce30e4fd95ce68d8875e19ab3ebf716e5887adb6eefbc3c5ca6096f936643f4bf22a9f61a1e35b019cfaabfe331ad2897a3b70bd6846c5003a999719d26246796a1d60b18bf89bdf4f5fea3b976ad7739e00089f7f11a5833351515e330d8580f918ea694a438f384946cdae0d9d3ccda33bc6de1a64d6c25c0b3f7d905172956"},
		{"ff7f99a16b3e2c0f9daa2a44c9a364b212d836ba57f8d9b0e050490d1e74"},
		{"f299d507d916e67f93345a42042e859170eb755262355826fcb7ed0d2e9c999bb21662275d1b99a53b397bf77f4e2af38a41358c41e9ecd750f3cc2859a3fef8a9ef9c189b7489fb0048903cfe78f5171f2476f86aae2346e5390740b09bb185268af16146ccab9876d8931f670f9ba93805f0277a3cba0fc9671cc78ac53ce60f538c7aa616660e3ca1e1eabf8938c095baeacb4ce11889c52ce63b9511d2f176d563a75a34418fddcb4e712a5936e4f72a2269b423954dadcf"},
	}

	for i, test := range tests {
		buf, err := hex.DecodeString(test.input)
		if err != nil {
			t.Error(err)
			continue // do not hash a partially decoded input
		}

		p := POW(buf)
		p0 := POW_0alloc(buf)
		p_optimized, _ := POW_optimized_v1(buf, MAX_LENGTH)
		if p != p0 {
			t.Errorf("Test failed: POW and POW_0alloc return different results for i=%d buf %x", i, buf)
		}
		if p != p_optimized {
			t.Errorf("Test failed: POW and POW_optimized_v1 return different results for i=%d buf %x", i, buf)
		}
	}
}

func BenchmarkPOW_optimized_v1(b *testing.B) {
	for i := 0; i < b.N; i++ {
		rand.Read(cases[0][:])
		_, _ = POW_optimized_v1(cases[0][:], MAX_LENGTH)
	}
}
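The tests above call POW_optimized_v1 with max_limit = MAX_LENGTH. The sketch below copies the stage-2 length formula from POW and computes the range it can take: from 147,253 up to 147,253 + 0xFFFFF = 1,195,828. So that limit is never hit, and stage2_length+1 always fits in the MAX_LENGTH-sized index buffers. The constant and function names are hypothetical mirrors of the package's own.

```go
package main

import (
	"encoding/binary"
	"fmt"
)

const stage1Length = 147253                      // mirrors stage1_length
const maxLength = 1024*1024 + stage1Length + 1024 // mirrors MAX_LENGTH (1196853)

// stage2Length mirrors POW: the low 20 bits of the first four key bytes,
// read little-endian, are added to the stage-1 length.
func stage2Length(key [32]byte) int {
	return stage1Length + int(binary.LittleEndian.Uint32(key[:])&0xfffff)
}

func main() {
	var zero, ones [32]byte
	for i := range ones {
		ones[i] = 0xff
	}
	// Smallest and largest possible stage-2 lengths, and the buffer bound.
	fmt.Println(stage2Length(zero), stage2Length(ones), maxLength)
}
```

Only key bytes 0-2 (and just the low nibble of byte 2) influence the length. Byte 3 falls entirely outside the 0xfffff mask.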

@ -1,121 +0,0 @@

package astrobwt

import (
	"math/rand"
	"testing"
)

// see https://www.geeksforgeeks.org/burrows-wheeler-data-transform-algorithm/
func TestBWTAndInverseTransform(t *testing.T) {
	tests := []struct {
		input string
		bwt   string
	}{
		{"BANANA", "ANNB$AA"}, // from https://www.geeksforgeeks.org/burrows-wheeler-data-transform-algorithm/
		{"abracadabra", "ard$rcaaaabb"},
		{"appellee", "e$elplepa"},
		{"GATGCGAGAGATG", "GGGGGGTCAA$TAA"},
		{"abcdefg", "g$abcdef"},
	}

	for _, test := range tests {
		trans2, eos := BWT([]byte(test.input))
		trans2[eos] = '$'

		if string(trans2) != test.bwt {
			t.Errorf("Test failed: Transform %s", test.input)
		}
		if got := string(InverseTransform(trans2, '$')); got != test.input {
			t.Errorf("Test failed: InverseTransform expected '%s' actual '%s'", test.input, got)
		}

		p := POW([]byte(test.input))
		p0 := POW_0alloc([]byte(test.input))
		if p != p0 {
			t.Error("Test failed: difference between pow and pow_0alloc")
		}
	}
}

func TestFromSuffixArray(t *testing.T) {
	s := "GATGCGAGAGATG"
	trans := "GGGGGGTCAA$TAA"

	sa := SuffixArray([]byte(s))
	B, err := FromSuffixArray([]byte(s), sa, '$')
	if err != nil {
		t.Error("Test failed: FromSuffixArray error")
	}
	if string(B) != trans {
		t.Error("Test failed: FromSuffixArray returns wrong result")
	}
}

func TestPow_Powalloc(t *testing.T) {
	p := POW([]byte{0, 0, 0, 0})
	p0 := POW_0alloc([]byte{0, 0, 0, 0})
	if p != p0 {
		t.Error("Test failed: POW and POW_0alloc return different results")
	}
}

var cases [][]byte

func init() {
	rand.Seed(1)
	alphabet := "abcdefghjijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ01234567890"
	n := len(alphabet)
	_ = n
	scales := []int{100000}
	cases = make([][]byte, len(scales))
	for i, scale := range scales {
		l := scale
		buf := make([]byte, int(l))
		for j := 0; j < int(l); j++ {